Blog


Understanding Bayesian A/B testing (using baseball statistics)

Written by Sayed Jamal Mirkamali

Mike Piazza has a slightly higher career batting average (2127 hits / 6911 at-bats = 0.308) than Hank Aaron (3771 hits / 12364 at-bats = 0.305). But can we say with confidence that his skill is actually higher, or is it possible he just got lucky a bit more often?

In this series of posts about an empirical Bayesian approach to batting statistics, we’ve been estimating batting averages by modeling each batter’s hits as a binomial distribution, with a beta prior on the batting average. But we’ve been looking at a single batter at a time. What if we want to compare two batters, give a probability that one is better than the other, and estimate by how much?
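For reference, the single-batter model described above can be written as a conjugate beta-binomial update. The symbols H (hits), AB (at-bats), and the prior parameters α₀ and β₀ are notation introduced here for illustration; in an empirical Bayes setup the prior parameters are estimated from the population of batters.

```latex
\begin{aligned}
H \mid p &\sim \operatorname{Binomial}(AB,\ p), \qquad p \sim \operatorname{Beta}(\alpha_0,\ \beta_0) \\
p \mid H &\sim \operatorname{Beta}(\alpha_0 + H,\ \beta_0 + AB - H), \qquad
\mathbb{E}[p \mid H] = \frac{\alpha_0 + H}{\alpha_0 + \beta_0 + AB}
\end{aligned}
```

Comparing two batters then amounts to comparing two such beta posteriors, one per batter.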

This topic is quite relevant to my own work and to the data science field, because understanding the difference between two proportions is central to A/B testing. One of the most common examples of A/B testing is comparing clickthrough rates (“out of X impressions, there have been Y clicks”), which on the surface is similar to our batting average estimation problem (“out of X at-bats, there have been Y hits”).¹

Here, we’re going to look at an empirical Bayesian approach to comparing two batters.² We’ll define the problem in terms of the difference between the two batters’ posterior distributions, and look at four mathematical and computational strategies we can use to resolve this question. While we’re focusing on baseball here, remember that similar strategies apply to A/B testing, and indeed to many Bayesian models.
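Below is a minimal sketch, assuming independent beta posteriors for the two batters, of three common ways to estimate P(p_A > p_B): Monte Carlo simulation, an exact closed-form sum over Beta functions, and a normal approximation. The prior values ALPHA0 = 79 and BETA0 = 228 are illustrative assumptions, not the values fitted in the series, and all function names are hypothetical. The closed form used here requires an integer alpha for the first batter.

```python
import math
import random

# Illustrative prior: an assumption for this sketch, not the series' fitted values.
ALPHA0, BETA0 = 79, 228


def posterior(hits, at_bats, alpha0=ALPHA0, beta0=BETA0):
    """Beta posterior (alpha, beta) after observing `hits` in `at_bats`."""
    return alpha0 + hits, beta0 + at_bats - hits


def log_beta(x, y):
    """log B(x, y) computed via log-gamma to avoid overflow."""
    return math.lgamma(x) + math.lgamma(y) - math.lgamma(x + y)


def prob_a_beats_b_simulation(a, b, draws=50_000, seed=1):
    """Monte Carlo estimate of P(p_A > p_B) for independent Beta posteriors."""
    rng = random.Random(seed)
    wins = sum(rng.betavariate(*a) > rng.betavariate(*b) for _ in range(draws))
    return wins / draws


def prob_a_beats_b_exact(a, b):
    """Closed-form P(p_A > p_B) as a finite sum; needs an integer alpha for A."""
    alpha_a, beta_a = a
    alpha_b, beta_b = b
    if alpha_a != int(alpha_a):
        raise ValueError("closed form needs an integer alpha for A")
    total = 0.0
    for i in range(int(alpha_a)):
        # Each term is B(alpha_b + i, beta_a + beta_b) /
        # ((beta_a + i) * B(1 + i, beta_a) * B(alpha_b, beta_b)), on the log scale.
        total += math.exp(
            log_beta(alpha_b + i, beta_a + beta_b)
            - math.log(beta_a + i)
            - log_beta(1 + i, beta_a)
            - log_beta(alpha_b, beta_b)
        )
    return total


def prob_a_beats_b_normal(a, b):
    """Approximate each Beta by a normal with the same mean and variance."""
    def moments(alpha, beta):
        n = alpha + beta
        return alpha / n, alpha * beta / (n * n * (n + 1))

    mean_a, var_a = moments(*a)
    mean_b, var_b = moments(*b)
    z = (mean_a - mean_b) / math.sqrt(var_a + var_b)
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))  # standard normal CDF at z


if __name__ == "__main__":
    piazza = posterior(2127, 6911)
    aaron = posterior(3771, 12364)
    for method in (prob_a_beats_b_simulation, prob_a_beats_b_exact, prob_a_beats_b_normal):
        print(f"{method.__name__}: {method(piazza, aaron):.3f}")
```

With posteriors this concentrated, the three estimates should land close together. The normal approximation is the cheapest, the simulation is the easiest to extend to other quantities (such as the size of the difference), and the closed form is exact but only applies when alpha is an integer.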

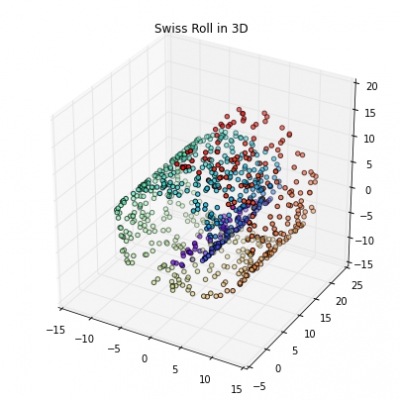

Python - Kernel tricks and nonlinear dimensionality reduction via RBF kernel PCA

Written by Sayed Jamal Mirkamali

Most machine learning algorithms have been developed and statistically validated for linearly separable data. Popular examples are linear classifiers like Support Vector Machines (SVMs), or the (standard) Principal Component Analysis (PCA) for dimensionality reduction. However, most real-world data requires nonlinear methods to successfully perform tasks that involve analyzing and discovering patterns.

The focus of this article is to briefly introduce the idea of kernel methods and to implement a Gaussian radial basis function (RBF) kernel that is used to perform nonlinear dimensionality reduction via RBF kernel principal component analysis (kPCA).
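As a rough illustration of the idea, here is a minimal NumPy sketch of RBF kernel PCA, assuming the Gaussian kernel k(x, y) = exp(-gamma * ||x - y||^2). The function name `rbf_kernel_pca`, the `gamma` values, and the toy ring data are illustrative choices, not the article's own code. The steps are: build the kernel matrix, center it in feature space, and keep the eigenvectors with the largest eigenvalues.

```python
import numpy as np


def rbf_kernel_pca(X, gamma, n_components):
    """Project the rows of X onto the top kernel principal components.

    Uses the Gaussian RBF kernel k(x, y) = exp(-gamma * ||x - y||^2).
    """
    # Pairwise squared Euclidean distances between all samples.
    sq_norms = np.sum(X ** 2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * X @ X.T
    sq_dists = np.maximum(sq_dists, 0.0)  # clip tiny negatives from round-off

    # Symmetric n x n kernel (Gram) matrix.
    K = np.exp(-gamma * sq_dists)

    # Center the kernel in feature space: K - 1K - K1 + 1K1, with 1 = (1/n) * ones.
    n = K.shape[0]
    one_n = np.full((n, n), 1.0 / n)
    K = K - one_n @ K - K @ one_n + one_n @ K @ one_n

    # eigh returns eigenvalues in ascending order; keep the largest ones.
    eigvals, eigvecs = np.linalg.eigh(K)
    idx = np.argsort(eigvals)[::-1][:n_components]
    eigvals, eigvecs = eigvals[idx], eigvecs[:, idx]

    # Projection of training sample i onto component j is sqrt(lambda_j) * v_j[i].
    return eigvecs * np.sqrt(np.maximum(eigvals, 0.0))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Two concentric rings: not linearly separable in the original 2-D space.
    angles = rng.uniform(0, 2 * np.pi, 200)
    radii = np.where(np.arange(200) < 100, 1.0, 3.0)
    X = np.column_stack([radii * np.cos(angles), radii * np.sin(angles)])
    Z = rbf_kernel_pca(X, gamma=0.5, n_components=2)
    print(Z.shape)  # (200, 2)
```

The choice of `gamma` controls how local the kernel is and usually has to be tuned; this sketch only projects the training data and does not handle new points.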

Machine Learning is dead - Long live machine learning!

Written by Sayed Jamal Mirkamali

You may be thinking that this title makes no sense at all. ML, AI, ANN and deep learning have made it into the everyday lexicon, and here I am, proclaiming that ML is dead. Well, here is what I mean…

The open sourcing of entire ML frameworks marks the end of a phase of rapid tool development, and thus the death of ML as we have known it so far. The next phase will be marked by the ubiquitous integration of these tools into software applications. And that is how ML will live forever: it will seamlessly and inextricably integrate into our lives.

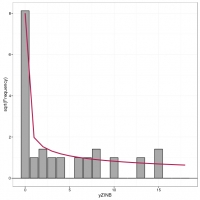

Rootograms: A new way to assess count models

Written by Sayed Jamal Mirkamali

Assessing the fit of a count regression model is not necessarily a straightforward enterprise; often we just look at residuals, which invariably contain patterns of some form due to the discrete nature of the observations, or we plot observed versus fitted values as a scatter plot. Recently, while perusing the latest statistics offerings on arXiv, I came across Kleiber and Zeileis (2016), who propose the rootogram as an improved approach to assessing the fit of a count regression model. The paper is illustrated using R and the authors’ countreg package (currently on R-Forge only). Here, I thought I’d take a quick look at the rootogram with some simulated species abundance data.
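To make the idea concrete, here is a small Python sketch of the quantities behind a hanging rootogram for a Poisson fit: for each count k it compares the square root of the observed frequency with the square root of the expected frequency (the sum of each observation's fitted probability of k), with the observed bar hanging from the expected curve. This is not the countreg API (which is R); `hanging_rootogram`, `poisson_pmf`, and the toy counts are hypothetical, and the fitted means must be positive.

```python
import math
from collections import Counter


def poisson_pmf(k, mu):
    """P(Y = k) for a Poisson(mu) variable (mu > 0), computed on the log scale."""
    return math.exp(k * math.log(mu) - mu - math.lgamma(k + 1))


def hanging_rootogram(counts, fitted_means, max_count=None):
    """Return (k, sqrt_observed, sqrt_expected, bar_bottom) rows.

    The expected frequency at k sums each observation's fitted P(Y = k).
    In a hanging rootogram the sqrt(observed) bar hangs from the
    sqrt(expected) curve, so its bottom sits at sqrt(expected) - sqrt(observed);
    bottoms far from zero flag counts that the model fits poorly.
    """
    observed = Counter(counts)
    if max_count is None:
        max_count = max(counts)
    rows = []
    for k in range(max_count + 1):
        expected = sum(poisson_pmf(k, mu) for mu in fitted_means)
        sqrt_obs = math.sqrt(observed.get(k, 0))
        sqrt_exp = math.sqrt(expected)
        rows.append((k, sqrt_obs, sqrt_exp, sqrt_exp - sqrt_obs))
    return rows


if __name__ == "__main__":
    # Toy, made-up species counts with many zeros (hypothetical data).
    counts = [0, 0, 0, 0, 0, 1, 1, 2, 2, 3, 5, 8]
    mu_hat = sum(counts) / len(counts)  # intercept-only Poisson MLE
    for k, so, se, bottom in hanging_rootogram(counts, [mu_hat] * len(counts)):
        print(f"k={k:2d}  sqrt(obs)={so:.2f}  sqrt(exp)={se:.2f}  bottom={bottom:+.2f}")
```

The square-root scale is what makes deviations at rare, large counts visible next to deviations at common, small counts; a plotting library would draw the bars and the curve from these rows.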

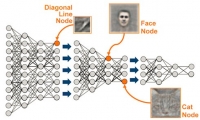

The concept is certainly compelling. Having a machine capable of reacting to real-world visual, auditory, or other types of data and then responding in an intelligent way was the stuff of science fiction until very recently. We are now on the verge of this new reality with little general understanding of what artificial intelligence, convolutional neural networks, and deep learning can (and can’t) do, or what it takes to make them work. At the simplest level, much of the current effort around deep learning involves very rapid recognition and classification of objects, whether visual, audible, or some other form of digital data. Using cameras, microphones, and other types of sensors, data is fed into a system that contains a multi-level set of filters providing increasingly detailed levels of differentiation. Think of it like the animal or plant classification charts from your grammar school days: Kingdom, Phylum, Class, Order, Family, Genus, Species.


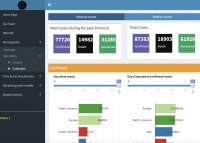

A synergetic R-Shiny portal for modeling and tracking of COVID-19 data

Written by S. Morteza Najibi on Friday, 08 January 2021 07:03 in SDAT News


Dr. Mahdi Salehi, an associate member of SDAT and assistant professor of statistics at the University of Neyshabur, introduced a useful interactive online dashboard that visualizes and tracks confirmed cases of COVID-19 in real time. The dashboard was made publicly available on 6 April 2020 to illustrate the counts of confirmed cases, deaths, and recoveries of COVID-19 at the country or continent level. It is intended as a user-friendly tool for researchers as well as the general public to track the COVID-19 pandemic; it is generated from trusted data sources and built in open-source R software (Shiny in particular), ensuring a high degree of transparency and reproducibility.

Access the shiny dashboard: https://mahdisalehi.shinyapps.io/Covid19Dashboard/


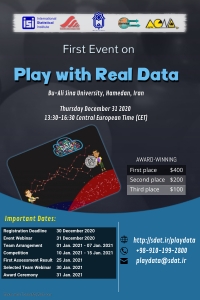

First Event on Play with Real Data

Written by S. Morteza Najibi on Wednesday, 23 December 2020 13:45 in SDAT News


The Scientific Data Analysis Team (SDAT) intends to organize the first event on the value of data, giving data holders and data analysts an opportunity to extract maximum value from their data. The event is organized by the International Statistical Institute (ISI) and SDAT and hosted at Bu-Ali Sina University, Hamedan, Iran.

The organizers and data providers will give more information about the goals, initial ideas, team arrangement, competition process, and benefits of attending this event in a webinar hosted on the ISI GoToWebinar system. Everyone is invited to participate in this webinar for free, but registration on the webinar system is required by 30 December 2020.

Event Time: 31 December 2020 - 13:30-16:30 Central European Time (CET)

Register for the webinar: https://register.gotowebinar.com/register/8913834636664974352

More details about this event: http://sdat.ir/en/playdata

Aims and outputs:

• Playing with real data using exploratory and predictive data analysis techniques

• A platform connecting a limited number of data providers with hundreds to thousands of data scientist teams

• Improving the creativity and scientific reasoning of data scientists and statisticians

• Finding possible “bugs” in current data analysis methods and new developments

• Learning different views about a dataset

Awards:

The best-report awards are cash prizes:

$400 for first place,

$200 for second place, and

$100 for third place.

Important Dates:

Event Webinar: 31 December 2020 - 13:30-16:30 Central European Time (CET).

Team Arrangement: 01 Jan. 2021 - 07 Jan. 2021

Competition: 10 Jan. 2021 - 15 Jan. 2021

First Assessment Result: 25 Jan. 2021

Selected Teams Webinar: 30 Jan. 2021

Award Ceremony: 31 Jan. 2021

Please share this event with your colleagues, students, and data analysts.


Development of Neuroimaging Symposium and Advanced fMRI Data Analysis

Written by S. Morteza Najibi on Sunday, 21 April 2019 12:18 in SDAT News


The Development of Structural and Functional Neuroimaging Symposium was held at the School of Sciences, Shiraz University, on 17 April 2019. The Advanced fMRI Data Analysis Workshop was also held on 18-19 April 2019. For more information please visit: http://sdat.ir/dns98


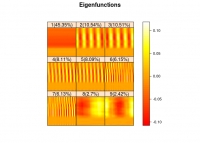

Releasing Rfssa Package by SDAT Members on CRAN

Written by S. Morteza Najibi on Sunday, 03 March 2019 21:03 in SDAT News


The Rfssa package is available on CRAN. Dr. Hossein Haghbin and Dr. Seyed Morteza Najibi (SDAT members) have published this package, which provides a collection of functions to implement Functional Singular Spectrum Analysis (FSSA) for analysing Functional Time Series (FTS). FSSA is a novel non-parametric method for decomposing and reconstructing FTS. For more information, please visit the package's GitHub homepage.


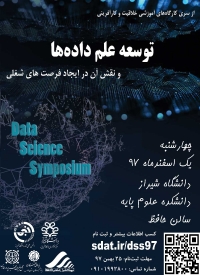

Data Science Symposium

Written by S. Morteza Najibi on Friday, 01 February 2019 00:13 in SDAT News


The Symposium of Data Science Development and Its Job Opportunities will be held at the Faculty of Science, Shiraz University, on 20 February 2019. For more information please visit: http://sdat.ir/dss97


About Us

SDAT is an abbreviation for Scientific Data Analysis Team. It consists of specialists in various fields of data science, including Statistical Analytics, Business Analytics, Big Data Analytics, and Health Analytics.

Get In Touch

Address: No.15 13th West Street, North Sarrafan, Apt. No. 1 Saadat Abad- Tehran

Phone: +98-910-199-2800

Email: info@sdat.ir

JROOT/libraries/src/Component/ComponentHelper.php:377 4 Joomla\CMS\Component\ComponentHelper::renderComponent() JROOT/libraries/src/Application/SiteApplication.php:194 3 Joomla\CMS\Application\SiteApplication->dispatch() JROOT/libraries/src/Application/SiteApplication.php:233 2 Joomla\CMS\Application\SiteApplication->doExecute() JROOT/libraries/src/Application/CMSApplication.php:225 1 Joomla\CMS\Application\CMSApplication->execute() JROOT/index.php:49 - Query Time: 0.39 ms After last query: 20.40 ms Query memory: 0.009 MB Memory before query: 19.166 MB Rows returned: 1

SELECT enabled

FROM w0v41_extensions

WHERE element = 'com_sppagebuilder'

AND type = 'component'id select_type table partitions type possible_keys key key_len ref rows filtered Extra 1 SIMPLE w0v41_extensions NULL ref element_clientid,element_folder_clientid,extension element_clientid 402 const 1 10.00 Using where No SHOW PROFILE (maybe because there are more than 100 queries)# Caller File and line number 18 JDatabaseDriverMysqli->execute() JROOT/libraries/joomla/database/driver.php:1740 17 JDatabaseDriver->loadResult() JROOT/plugins/content/sppagebuilder/sppagebuilder.php:172 16 PlgContentSppagebuilder->isSppagebuilderEnabled() JROOT/plugins/content/sppagebuilder/sppagebuilder.php:36 15 PlgContentSppagebuilder->__construct() JROOT/libraries/src/Plugin/PluginHelper.php:280 14 Joomla\CMS\Plugin\PluginHelper::import() JROOT/libraries/src/Plugin/PluginHelper.php:182 13 Joomla\CMS\Plugin\PluginHelper::importPlugin() JROOT/components/com_k2/views/itemlist/view.html.php:65 12 K2ViewItemlist->display() JROOT/libraries/src/Cache/Controller/ViewController.php:102 11 Joomla\CMS\Cache\Controller\ViewController->get() JROOT/libraries/src/MVC/Controller/BaseController.php:655 10 Joomla\CMS\MVC\Controller\BaseController->display() JROOT/components/com_k2/controllers/controller.php:20 9 K2Controller->display() JROOT/components/com_k2/controllers/itemlist.php:50 8 K2ControllerItemlist->display() JROOT/libraries/src/MVC/Controller/BaseController.php:702 7 Joomla\CMS\MVC\Controller\BaseController->execute() JROOT/components/com_k2/k2.php:57 6 require_once JROOT/components/com_k2/k2.php JROOT/libraries/src/Component/ComponentHelper.php:402 5 Joomla\CMS\Component\ComponentHelper::executeComponent() JROOT/libraries/src/Component/ComponentHelper.php:377 4 Joomla\CMS\Component\ComponentHelper::renderComponent() JROOT/libraries/src/Application/SiteApplication.php:194 3 Joomla\CMS\Application\SiteApplication->dispatch() JROOT/libraries/src/Application/SiteApplication.php:233 2 Joomla\CMS\Application\SiteApplication->doExecute() 
JROOT/libraries/src/Application/CMSApplication.php:225 1 Joomla\CMS\Application\CMSApplication->execute() JROOT/index.php:49 - Query Time: 0.66 ms After last query: 5.01 ms Query memory: 0.007 MB Memory before query: 19.473 MB Rows returned: 0

SELECT id

FROM w0v41_k2_categories

WHERE parent IN(2,3)

AND id NOT IN(2,3)

AND published=1

AND trash=0

AND access IN(1,1,5)

AND language IN('en-GB', '*')id select_type table partitions type possible_keys key key_len ref rows filtered Extra 1 SIMPLE w0v41_k2_categories NULL range PRIMARY,category,parent,published,access,trash,language,idx_category PRIMARY 4 NULL 13 7.14 Using where No SHOW PROFILE (maybe because there are more than 100 queries)# Caller File and line number 16 JDatabaseDriverMysqli->execute() JROOT/libraries/joomla/database/driver.php:1550 15 JDatabaseDriver->loadColumn() JROOT/components/com_k2/models/itemlist.php:412 14 K2ModelItemlist->getCategoryTree() JROOT/components/com_k2/models/itemlist.php:257 13 K2ModelItemlist->getData() JROOT/components/com_k2/views/itemlist/view.html.php:568 12 K2ViewItemlist->display() JROOT/libraries/src/Cache/Controller/ViewController.php:102 11 Joomla\CMS\Cache\Controller\ViewController->get() JROOT/libraries/src/MVC/Controller/BaseController.php:655 10 Joomla\CMS\MVC\Controller\BaseController->display() JROOT/components/com_k2/controllers/controller.php:20 9 K2Controller->display() JROOT/components/com_k2/controllers/itemlist.php:50 8 K2ControllerItemlist->display() JROOT/libraries/src/MVC/Controller/BaseController.php:702 7 Joomla\CMS\MVC\Controller\BaseController->execute() JROOT/components/com_k2/k2.php:57 6 require_once JROOT/components/com_k2/k2.php JROOT/libraries/src/Component/ComponentHelper.php:402 5 Joomla\CMS\Component\ComponentHelper::executeComponent() JROOT/libraries/src/Component/ComponentHelper.php:377 4 Joomla\CMS\Component\ComponentHelper::renderComponent() JROOT/libraries/src/Application/SiteApplication.php:194 3 Joomla\CMS\Application\SiteApplication->dispatch() JROOT/libraries/src/Application/SiteApplication.php:233 2 Joomla\CMS\Application\SiteApplication->doExecute() JROOT/libraries/src/Application/CMSApplication.php:225 1 Joomla\CMS\Application\CMSApplication->execute() JROOT/index.php:49 - Query Time: 2.12 ms After last query: 0.07 ms Query memory: 0.008 MB Memory before query: 19.484 MB Rows returned: 5

/* Frontend / K2 / Items */ SELECT /*+ MAX_EXECUTION_TIME(60000) */ SQL_CALC_FOUND_ROWS i.*, c.name AS categoryname, c.id AS categoryid, c.alias AS categoryalias, c.params AS categoryparams

FROM w0v41_k2_items AS i

INNER JOIN w0v41_k2_categories AS c USE INDEX (idx_category)

ON c.id = i.catid

WHERE i.published = 1

AND i.access IN(1,5)

AND i.trash = 0

AND c.published = 1

AND c.access IN(1,5)

AND c.trash = 0

AND c.language IN('en-GB', '*')

AND i.language IN('en-GB', '*')

AND (i.publish_up = '0000-00-00 00:00:00' OR i.publish_up <= '2025-07-15 00:43:58')

AND (i.publish_down = '0000-00-00 00:00:00' OR i.publish_down >= '2025-07-15 00:43:58')

AND c.id IN(2,3)

ORDER BY i.id DESC

LIMIT 0, 7EXPLAIN not possible on query: /* Frontend / K2 / Items */ SELECT /*+ MAX_EXECUTION_TIME(60000) */ SQL_CALC_FOUND_ROWS i.*, c.name AS categoryname, c.id AS categoryid, c.alias AS categoryalias, c.params AS categoryparams FROM w0v41_k2_items AS i INNER JOIN w0v41_k2_categories AS c USE INDEX (idx_category) ON c.id = i.catid WHERE i.published = 1 AND i.access IN(1,5) AND i.trash = 0 AND c.published = 1 AND c.access IN(1,5) AND c.trash = 0 AND c.language IN('en-GB', '*') AND i.language IN('en-GB', '*') AND (i.publish_up = '0000-00-00 00:00:00' OR i.publish_up <= '2025-07-15 00:43:58') AND (i.publish_down = '0000-00-00 00:00:00' OR i.publish_down >= '2025-07-15 00:43:58') AND c.id IN(2,3) ORDER BY i.id DESC LIMIT 0, 7No SHOW PROFILE (maybe because there are more than 100 queries)# Caller File and line number 15 JDatabaseDriverMysqli->execute() JROOT/libraries/joomla/database/driver.php:1701 14 JDatabaseDriver->loadObjectList() JROOT/components/com_k2/models/itemlist.php:352 13 K2ModelItemlist->getData() JROOT/components/com_k2/views/itemlist/view.html.php:568 12 K2ViewItemlist->display() JROOT/libraries/src/Cache/Controller/ViewController.php:102 11 Joomla\CMS\Cache\Controller\ViewController->get() JROOT/libraries/src/MVC/Controller/BaseController.php:655 10 Joomla\CMS\MVC\Controller\BaseController->display() JROOT/components/com_k2/controllers/controller.php:20 9 K2Controller->display() JROOT/components/com_k2/controllers/itemlist.php:50 8 K2ControllerItemlist->display() JROOT/libraries/src/MVC/Controller/BaseController.php:702 7 Joomla\CMS\MVC\Controller\BaseController->execute() JROOT/components/com_k2/k2.php:57 6 require_once JROOT/components/com_k2/k2.php JROOT/libraries/src/Component/ComponentHelper.php:402 5 Joomla\CMS\Component\ComponentHelper::executeComponent() JROOT/libraries/src/Component/ComponentHelper.php:377 4 Joomla\CMS\Component\ComponentHelper::renderComponent() JROOT/libraries/src/Application/SiteApplication.php:194 3 
Joomla\CMS\Application\SiteApplication->dispatch() JROOT/libraries/src/Application/SiteApplication.php:233 2 Joomla\CMS\Application\SiteApplication->doExecute() JROOT/libraries/src/Application/CMSApplication.php:225 1 Joomla\CMS\Application\CMSApplication->execute() JROOT/index.php:49 - Query Time: 0.17 ms After last query: 0.16 ms Query memory: 0.007 MB Memory before query: 19.715 MB Rows returned: 1

SELECT FOUND_ROWS();

id select_type table partitions type possible_keys key key_len ref rows filtered Extra 1 SIMPLE NULL NULL NULL NULL NO INDEX KEY COULD BE USED NULL NULL NULL NULL No tables used No SHOW PROFILE (maybe because there are more than 100 queries)# Caller File and line number 15 JDatabaseDriverMysqli->execute() JROOT/libraries/joomla/database/driver.php:1740 14 JDatabaseDriver->loadResult() JROOT/components/com_k2/models/itemlist.php:365 13 K2ModelItemlist->getData() JROOT/components/com_k2/views/itemlist/view.html.php:568 12 K2ViewItemlist->display() JROOT/libraries/src/Cache/Controller/ViewController.php:102 11 Joomla\CMS\Cache\Controller\ViewController->get() JROOT/libraries/src/MVC/Controller/BaseController.php:655 10 Joomla\CMS\MVC\Controller\BaseController->display() JROOT/components/com_k2/controllers/controller.php:20 9 K2Controller->display() JROOT/components/com_k2/controllers/itemlist.php:50 8 K2ControllerItemlist->display() JROOT/libraries/src/MVC/Controller/BaseController.php:702 7 Joomla\CMS\MVC\Controller\BaseController->execute() JROOT/components/com_k2/k2.php:57 6 require_once JROOT/components/com_k2/k2.php JROOT/libraries/src/Component/ComponentHelper.php:402 5 Joomla\CMS\Component\ComponentHelper::executeComponent() JROOT/libraries/src/Component/ComponentHelper.php:377 4 Joomla\CMS\Component\ComponentHelper::renderComponent() JROOT/libraries/src/Application/SiteApplication.php:194 3 Joomla\CMS\Application\SiteApplication->dispatch() JROOT/libraries/src/Application/SiteApplication.php:233 2 Joomla\CMS\Application\SiteApplication->doExecute() JROOT/libraries/src/Application/CMSApplication.php:225 1 Joomla\CMS\Application\CMSApplication->execute() JROOT/index.php:49 - Query Time: 1.30 ms After last query: 2.21 ms Query memory: 0.009 MB Memory before query: 19.835 MB Rows returned: 14

SHOW FULL COLUMNS

FROM `w0v41_k2_categories`EXPLAIN not possible on query: SHOW FULL COLUMNS FROM `w0v41_k2_categories`No SHOW PROFILE (maybe because there are more than 100 queries)# Caller File and line number 19 JDatabaseDriverMysqli->execute() JROOT/libraries/joomla/database/driver.php:1701 18 JDatabaseDriver->loadObjectList() JROOT/libraries/joomla/database/driver/mysqli.php:448 17 JDatabaseDriverMysqli->getTableColumns() JROOT/libraries/src/Table/Table.php:261 16 Joomla\CMS\Table\Table->getFields() JROOT/libraries/src/Table/Table.php:180 15 Joomla\CMS\Table\Table->__construct() JROOT/administrator/components/com_k2/tables/k2category.php:34 14 TableK2Category->__construct() JROOT/libraries/src/Table/Table.php:328 13 Joomla\CMS\Table\Table::getInstance() JROOT/components/com_k2/views/itemlist/view.html.php:693 12 K2ViewItemlist->display() JROOT/libraries/src/Cache/Controller/ViewController.php:102 11 Joomla\CMS\Cache\Controller\ViewController->get() JROOT/libraries/src/MVC/Controller/BaseController.php:655 10 Joomla\CMS\MVC\Controller\BaseController->display() JROOT/components/com_k2/controllers/controller.php:20 9 K2Controller->display() JROOT/components/com_k2/controllers/itemlist.php:50 8 K2ControllerItemlist->display() JROOT/libraries/src/MVC/Controller/BaseController.php:702 7 Joomla\CMS\MVC\Controller\BaseController->execute() JROOT/components/com_k2/k2.php:57 6 require_once JROOT/components/com_k2/k2.php JROOT/libraries/src/Component/ComponentHelper.php:402 5 Joomla\CMS\Component\ComponentHelper::executeComponent() JROOT/libraries/src/Component/ComponentHelper.php:377 4 Joomla\CMS\Component\ComponentHelper::renderComponent() JROOT/libraries/src/Application/SiteApplication.php:194 3 Joomla\CMS\Application\SiteApplication->dispatch() JROOT/libraries/src/Application/SiteApplication.php:233 2 Joomla\CMS\Application\SiteApplication->doExecute() JROOT/libraries/src/Application/CMSApplication.php:225 1 Joomla\CMS\Application\CMSApplication->execute() JROOT/index.php:49 - Query 
Time: 0.30 ms After last query: 1.10 ms Query memory: 0.009 MB Memory before query: 19.884 MB Rows returned: 1

SELECT *

FROM w0v41_k2_categories

WHERE id = '3'id select_type table partitions type possible_keys key key_len ref rows filtered Extra 1 SIMPLE w0v41_k2_categories NULL const PRIMARY PRIMARY 4 const 1 100.00 NULL No SHOW PROFILE (maybe because there are more than 100 queries)# Caller File and line number 19 JDatabaseDriverMysqli->execute() JROOT/libraries/joomla/database/driver.php:1465 18 JDatabaseDriver->loadAssoc() JROOT/administrator/components/com_k2/tables/k2category.php:61 17 TableK2Category->load() JROOT/components/com_k2/models/item.php:65 16 K2ModelItem->prepareItem() Same as call in the line below. 15 call_user_func_array() JROOT/libraries/src/Cache/Controller/CallbackController.php:173 14 Joomla\CMS\Cache\Controller\CallbackController->get() JROOT/libraries/src/Cache/Controller/CallbackController.php:45 13 Joomla\CMS\Cache\Controller\CallbackController->call() JROOT/components/com_k2/views/itemlist/view.html.php:695 12 K2ViewItemlist->display() JROOT/libraries/src/Cache/Controller/ViewController.php:102 11 Joomla\CMS\Cache\Controller\ViewController->get() JROOT/libraries/src/MVC/Controller/BaseController.php:655 10 Joomla\CMS\MVC\Controller\BaseController->display() JROOT/components/com_k2/controllers/controller.php:20 9 K2Controller->display() JROOT/components/com_k2/controllers/itemlist.php:50 8 K2ControllerItemlist->display() JROOT/libraries/src/MVC/Controller/BaseController.php:702 7 Joomla\CMS\MVC\Controller\BaseController->execute() JROOT/components/com_k2/k2.php:57 6 require_once JROOT/components/com_k2/k2.php JROOT/libraries/src/Component/ComponentHelper.php:402 5 Joomla\CMS\Component\ComponentHelper::executeComponent() JROOT/libraries/src/Component/ComponentHelper.php:377 4 Joomla\CMS\Component\ComponentHelper::renderComponent() JROOT/libraries/src/Application/SiteApplication.php:194 3 Joomla\CMS\Application\SiteApplication->dispatch() JROOT/libraries/src/Application/SiteApplication.php:233 2 Joomla\CMS\Application\SiteApplication->doExecute() 
JROOT/libraries/src/Application/CMSApplication.php:225 1 Joomla\CMS\Application\CMSApplication->execute() JROOT/index.php:49 - Query Time: 0.24 ms After last query: 3.28 ms Query memory: 0.012 MB Memory before query: 19.928 MB Rows returned: 1

SELECT id, alias, parent

FROM w0v41_k2_categories

WHERE published = 1

AND id = 3id select_type table partitions type possible_keys key key_len ref rows filtered Extra 1 SIMPLE w0v41_k2_categories NULL const PRIMARY,category,published,idx_category PRIMARY 4 const 1 100.00 NULL No SHOW PROFILE (maybe because there are more than 100 queries)# Caller File and line number 28 JDatabaseDriverMysqli->execute() JROOT/libraries/joomla/database/driver.php:1662 27 JDatabaseDriver->loadObject() JROOT/components/com_k2/router.php:493 26 getCategoryProps() JROOT/components/com_k2/router.php:501 25 getCategoryPath() JROOT/components/com_k2/router.php:205 24 k2BuildRoute() JROOT/libraries/src/Component/Router/RouterLegacy.php:69 23 Joomla\CMS\Component\Router\RouterLegacy->build() JROOT/libraries/src/Router/SiteRouter.php:532 22 Joomla\CMS\Router\SiteRouter->buildSefRoute() JROOT/libraries/src/Router/SiteRouter.php:502 21 Joomla\CMS\Router\SiteRouter->_buildSefRoute() JROOT/libraries/src/Router/Router.php:281 20 Joomla\CMS\Router\Router->build() JROOT/libraries/src/Router/SiteRouter.php:155 19 Joomla\CMS\Router\SiteRouter->build() JROOT/libraries/src/Router/Route.php:144 18 Joomla\CMS\Router\Route::link() JROOT/libraries/src/Router/Route.php:93 17 Joomla\CMS\Router\Route::_() JROOT/components/com_k2/models/item.php:68 16 K2ModelItem->prepareItem() Same as call in the line below. 
15 call_user_func_array() JROOT/libraries/src/Cache/Controller/CallbackController.php:173 14 Joomla\CMS\Cache\Controller\CallbackController->get() JROOT/libraries/src/Cache/Controller/CallbackController.php:45 13 Joomla\CMS\Cache\Controller\CallbackController->call() JROOT/components/com_k2/views/itemlist/view.html.php:695 12 K2ViewItemlist->display() JROOT/libraries/src/Cache/Controller/ViewController.php:102 11 Joomla\CMS\Cache\Controller\ViewController->get() JROOT/libraries/src/MVC/Controller/BaseController.php:655 10 Joomla\CMS\MVC\Controller\BaseController->display() JROOT/components/com_k2/controllers/controller.php:20 9 K2Controller->display() JROOT/components/com_k2/controllers/itemlist.php:50 8 K2ControllerItemlist->display() JROOT/libraries/src/MVC/Controller/BaseController.php:702 7 Joomla\CMS\MVC\Controller\BaseController->execute() JROOT/components/com_k2/k2.php:57 6 require_once JROOT/components/com_k2/k2.php JROOT/libraries/src/Component/ComponentHelper.php:402 5 Joomla\CMS\Component\ComponentHelper::executeComponent() JROOT/libraries/src/Component/ComponentHelper.php:377 4 Joomla\CMS\Component\ComponentHelper::renderComponent() JROOT/libraries/src/Application/SiteApplication.php:194 3 Joomla\CMS\Application\SiteApplication->dispatch() JROOT/libraries/src/Application/SiteApplication.php:233 2 Joomla\CMS\Application\SiteApplication->doExecute() JROOT/libraries/src/Application/CMSApplication.php:225 1 Joomla\CMS\Application\CMSApplication->execute() JROOT/index.php:49 - Query Time: 0.46 ms After last query: 2.36 ms Query memory: 0.009 MB Memory before query: 19.993 MB Rows returned: 1

SELECT tag.*

FROM w0v41_k2_tags AS tag JOIN w0v41_k2_tags_xref AS xref

ON tag.id = xref.tagID

WHERE tag.published = 1

AND xref.itemID = 13

ORDER BY xref.id ASCid select_type table partitions type possible_keys key key_len ref rows filtered Extra 1 SIMPLE xref NULL ref tagID,itemID itemID 4 const 1 100.00 NULL 1 SIMPLE tag NULL eq_ref PRIMARY,published PRIMARY 4 h88876_SDAT.xref.tagID 1 100.00 Using where No SHOW PROFILE (maybe because there are more than 100 queries)# Caller File and line number 19 JDatabaseDriverMysqli->execute() JROOT/libraries/joomla/database/driver.php:1701 18 JDatabaseDriver->loadObjectList() JROOT/components/com_k2/models/item.php:1201 17 K2ModelItem->getItemTags() JROOT/components/com_k2/models/item.php:111 16 K2ModelItem->prepareItem() Same as call in the line below. 15 call_user_func_array() JROOT/libraries/src/Cache/Controller/CallbackController.php:173 14 Joomla\CMS\Cache\Controller\CallbackController->get() JROOT/libraries/src/Cache/Controller/CallbackController.php:45 13 Joomla\CMS\Cache\Controller\CallbackController->call() JROOT/components/com_k2/views/itemlist/view.html.php:695 12 K2ViewItemlist->display() JROOT/libraries/src/Cache/Controller/ViewController.php:102 11 Joomla\CMS\Cache\Controller\ViewController->get() JROOT/libraries/src/MVC/Controller/BaseController.php:655 10 Joomla\CMS\MVC\Controller\BaseController->display() JROOT/components/com_k2/controllers/controller.php:20 9 K2Controller->display() JROOT/components/com_k2/controllers/itemlist.php:50 8 K2ControllerItemlist->display() JROOT/libraries/src/MVC/Controller/BaseController.php:702 7 Joomla\CMS\MVC\Controller\BaseController->execute() JROOT/components/com_k2/k2.php:57 6 require_once JROOT/components/com_k2/k2.php JROOT/libraries/src/Component/ComponentHelper.php:402 5 Joomla\CMS\Component\ComponentHelper::executeComponent() JROOT/libraries/src/Component/ComponentHelper.php:377 4 Joomla\CMS\Component\ComponentHelper::renderComponent() JROOT/libraries/src/Application/SiteApplication.php:194 3 Joomla\CMS\Application\SiteApplication->dispatch() JROOT/libraries/src/Application/SiteApplication.php:233 2 
Joomla\CMS\Application\SiteApplication->doExecute() JROOT/libraries/src/Application/CMSApplication.php:225 1 Joomla\CMS\Application\CMSApplication->execute() JROOT/index.php:49 - Query Time: 1.20 ms After last query: 2.86 ms Query memory: 0.012 MB Memory before query: 20.070 MB Rows returned: 17

SHOW FULL COLUMNS

FROM `w0v41_users`EXPLAIN not possible on query: SHOW FULL COLUMNS FROM `w0v41_users`No SHOW PROFILE (maybe because there are more than 100 queries)# Caller File and line number 28 JDatabaseDriverMysqli->execute() JROOT/libraries/joomla/database/driver.php:1701 27 JDatabaseDriver->loadObjectList() JROOT/libraries/joomla/database/driver/mysqli.php:448 26 JDatabaseDriverMysqli->getTableColumns() JROOT/libraries/src/Table/Table.php:261 25 Joomla\CMS\Table\Table->getFields() JROOT/libraries/src/Table/Table.php:180 24 Joomla\CMS\Table\Table->__construct() JROOT/libraries/src/Table/User.php:41 23 Joomla\CMS\Table\User->__construct() JROOT/libraries/src/Table/Table.php:328 22 Joomla\CMS\Table\Table::getInstance() JROOT/libraries/src/User/User.php:603 21 Joomla\CMS\User\User::getTable() JROOT/libraries/src/User/User.php:877 20 Joomla\CMS\User\User->load() JROOT/libraries/src/User/User.php:248 19 Joomla\CMS\User\User->__construct() JROOT/libraries/src/User/User.php:301 18 Joomla\CMS\User\User::getInstance() JROOT/libraries/src/Factory.php:266 17 Joomla\CMS\Factory::getUser() JROOT/components/com_k2/models/item.php:208 16 K2ModelItem->prepareItem() Same as call in the line below. 
15 call_user_func_array() JROOT/libraries/src/Cache/Controller/CallbackController.php:173 14 Joomla\CMS\Cache\Controller\CallbackController->get() JROOT/libraries/src/Cache/Controller/CallbackController.php:45 13 Joomla\CMS\Cache\Controller\CallbackController->call() JROOT/components/com_k2/views/itemlist/view.html.php:695 12 K2ViewItemlist->display() JROOT/libraries/src/Cache/Controller/ViewController.php:102 11 Joomla\CMS\Cache\Controller\ViewController->get() JROOT/libraries/src/MVC/Controller/BaseController.php:655 10 Joomla\CMS\MVC\Controller\BaseController->display() JROOT/components/com_k2/controllers/controller.php:20 9 K2Controller->display() JROOT/components/com_k2/controllers/itemlist.php:50 8 K2ControllerItemlist->display() JROOT/libraries/src/MVC/Controller/BaseController.php:702 7 Joomla\CMS\MVC\Controller\BaseController->execute() JROOT/components/com_k2/k2.php:57 6 require_once JROOT/components/com_k2/k2.php JROOT/libraries/src/Component/ComponentHelper.php:402 5 Joomla\CMS\Component\ComponentHelper::executeComponent() JROOT/libraries/src/Component/ComponentHelper.php:377 4 Joomla\CMS\Component\ComponentHelper::renderComponent() JROOT/libraries/src/Application/SiteApplication.php:194 3 Joomla\CMS\Application\SiteApplication->dispatch() JROOT/libraries/src/Application/SiteApplication.php:233 2 Joomla\CMS\Application\SiteApplication->doExecute() JROOT/libraries/src/Application/CMSApplication.php:225 1 Joomla\CMS\Application\CMSApplication->execute() JROOT/index.php:49 - Query Time: 0.49 ms After last query: 0.20 ms Query memory: 0.010 MB Memory before query: 20.109 MB Rows returned: 1

SELECT *

FROM `w0v41_users`

WHERE `id` = 111id select_type table partitions type possible_keys key key_len ref rows filtered Extra 1 SIMPLE w0v41_users NULL const PRIMARY PRIMARY 4 const 1 100.00 NULL No SHOW PROFILE (maybe because there are more than 100 queries)# Caller File and line number 23 JDatabaseDriverMysqli->execute() JROOT/libraries/joomla/database/driver.php:1465 22 JDatabaseDriver->loadAssoc() JROOT/libraries/src/Table/User.php:87 21 Joomla\CMS\Table\User->load() JROOT/libraries/src/User/User.php:880 20 Joomla\CMS\User\User->load() JROOT/libraries/src/User/User.php:248 19 Joomla\CMS\User\User->__construct() JROOT/libraries/src/User/User.php:301 18 Joomla\CMS\User\User::getInstance() JROOT/libraries/src/Factory.php:266 17 Joomla\CMS\Factory::getUser() JROOT/components/com_k2/models/item.php:208 16 K2ModelItem->prepareItem() Same as call in the line below. 15 call_user_func_array() JROOT/libraries/src/Cache/Controller/CallbackController.php:173 14 Joomla\CMS\Cache\Controller\CallbackController->get() JROOT/libraries/src/Cache/Controller/CallbackController.php:45 13 Joomla\CMS\Cache\Controller\CallbackController->call() JROOT/components/com_k2/views/itemlist/view.html.php:695 12 K2ViewItemlist->display() JROOT/libraries/src/Cache/Controller/ViewController.php:102 11 Joomla\CMS\Cache\Controller\ViewController->get() JROOT/libraries/src/MVC/Controller/BaseController.php:655 10 Joomla\CMS\MVC\Controller\BaseController->display() JROOT/components/com_k2/controllers/controller.php:20 9 K2Controller->display() JROOT/components/com_k2/controllers/itemlist.php:50 8 K2ControllerItemlist->display() JROOT/libraries/src/MVC/Controller/BaseController.php:702 7 Joomla\CMS\MVC\Controller\BaseController->execute() JROOT/components/com_k2/k2.php:57 6 require_once JROOT/components/com_k2/k2.php JROOT/libraries/src/Component/ComponentHelper.php:402 5 Joomla\CMS\Component\ComponentHelper::executeComponent() JROOT/libraries/src/Component/ComponentHelper.php:377 4 
Joomla\CMS\Component\ComponentHelper::renderComponent() JROOT/libraries/src/Application/SiteApplication.php:194 3 Joomla\CMS\Application\SiteApplication->dispatch() JROOT/libraries/src/Application/SiteApplication.php:233 2 Joomla\CMS\Application\SiteApplication->doExecute() JROOT/libraries/src/Application/CMSApplication.php:225 1 Joomla\CMS\Application\CMSApplication->execute() JROOT/index.php:49 - Query Time: 0.91 ms After last query: 4.21 ms Query memory: 0.010 MB Memory before query: 20.865 MB Rows returned: 3

SELECT `g`.`id`,`g`.`title`

FROM `w0v41_usergroups` AS g

INNER JOIN `w0v41_user_usergroup_map` AS m

ON m.group_id = g.id

WHERE `m`.`user_id` = 111id select_type table partitions type possible_keys key key_len ref rows filtered Extra 1 SIMPLE m NULL ref PRIMARY PRIMARY 4 const 3 100.00 Using index 1 SIMPLE g NULL eq_ref PRIMARY PRIMARY 4 h88876_SDAT.m.group_id 1 100.00 NULL No SHOW PROFILE (maybe because there are more than 100 queries)# Caller File and line number 23 JDatabaseDriverMysqli->execute() JROOT/libraries/joomla/database/driver.php:1506 22 JDatabaseDriver->loadAssocList() JROOT/libraries/src/Table/User.php:112 21 Joomla\CMS\Table\User->load() JROOT/libraries/src/User/User.php:880 20 Joomla\CMS\User\User->load() JROOT/libraries/src/User/User.php:248 19 Joomla\CMS\User\User->__construct() JROOT/libraries/src/User/User.php:301 18 Joomla\CMS\User\User::getInstance() JROOT/libraries/src/Factory.php:266 17 Joomla\CMS\Factory::getUser() JROOT/components/com_k2/models/item.php:208 16 K2ModelItem->prepareItem() Same as call in the line below. 15 call_user_func_array() JROOT/libraries/src/Cache/Controller/CallbackController.php:173 14 Joomla\CMS\Cache\Controller\CallbackController->get() JROOT/libraries/src/Cache/Controller/CallbackController.php:45 13 Joomla\CMS\Cache\Controller\CallbackController->call() JROOT/components/com_k2/views/itemlist/view.html.php:695 12 K2ViewItemlist->display() JROOT/libraries/src/Cache/Controller/ViewController.php:102 11 Joomla\CMS\Cache\Controller\ViewController->get() JROOT/libraries/src/MVC/Controller/BaseController.php:655 10 Joomla\CMS\MVC\Controller\BaseController->display() JROOT/components/com_k2/controllers/controller.php:20 9 K2Controller->display() JROOT/components/com_k2/controllers/itemlist.php:50 8 K2ControllerItemlist->display() JROOT/libraries/src/MVC/Controller/BaseController.php:702 7 Joomla\CMS\MVC\Controller\BaseController->execute() JROOT/components/com_k2/k2.php:57 6 require_once JROOT/components/com_k2/k2.php JROOT/libraries/src/Component/ComponentHelper.php:402 5 Joomla\CMS\Component\ComponentHelper::executeComponent() 
JROOT/libraries/src/Component/ComponentHelper.php:377 4 Joomla\CMS\Component\ComponentHelper::renderComponent() JROOT/libraries/src/Application/SiteApplication.php:194 3 Joomla\CMS\Application\SiteApplication->dispatch() JROOT/libraries/src/Application/SiteApplication.php:233 2 Joomla\CMS\Application\SiteApplication->doExecute() JROOT/libraries/src/Application/CMSApplication.php:225 1 Joomla\CMS\Application\CMSApplication->execute() JROOT/index.php:49 - Query Time: 0.81 ms After last query: 0.97 ms Query memory: 0.009 MB Memory before query: 20.873 MB Rows returned: 1

SELECT id, gender, description, image, url, `

group`, plugins

FROM w0v41_k2_users
